My last article on implementing fast interpreters was posted to both Hacker News and the Programming subreddit. Commenters raised some valid questions and pointed out related work. This post addresses some of those comments and collects the related work mentioned on HN and Reddit, along with a few additional references.

Why not write a simple JIT?

The main benefits of an interpreter are usually simplicity and portability. Writing the interpreter in assembly makes it a bit harder to write, and you lose portability. So, if we have to go down to the level of assembly anyway, why not write a simple template-based code generator? Wouldn’t that be faster? The answer is: it depends.

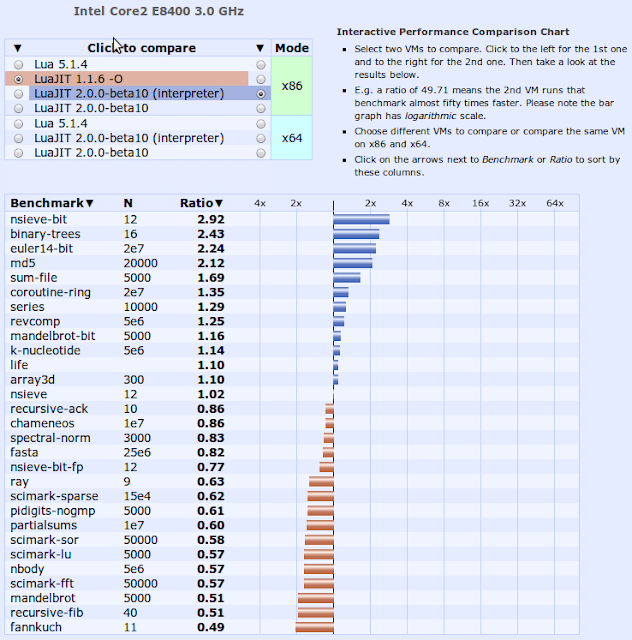

As Mike Pall (author of LuaJIT) pointed out, LuaJIT v1 was based on a simple JIT and does not consistently beat the LuaJIT v2 interpreter (which is written in assembly).

A JIT compiler (even a simple one) needs to:

Manage additional memory. If the code is short-lived (e.g., there is another optimisation level) then unused memory must be reclaimed and fragmentation may become an issue.

Mark that memory as executable (possibly requiring flushing the instruction cache or operating system calls).

Manage the transition between compiled code segments. E.g., we need a mapping from bytecode targets to the corresponding machine code. If the target hasn’t been compiled yet, it can be compiled on demand and the branch instruction can then be updated to jump directly to the target. If the target may ever change in the future, we also need a way to locate all the branches to it in order to invalidate them if necessary.

If the interpreter has multiple execution modes (e.g., a trace-based JIT has a special recording mode which is only used for a short time), then code needs to be generated for each execution mode.
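To make the point about transitions between compiled code segments more concrete, here is a minimal C sketch of the bookkeeping involved. It is a simulation under stated assumptions, not how LuaJIT or any other real JIT does it: `CodePtr` stands in for a pointer to generated machine code, `compile_target` is a hypothetical compiler that just returns a fixed C function, and all names and table sizes are made up for illustration. A real JIT would patch an actual jump instruction instead of writing to a struct field.

```c
#include <stddef.h>

enum { MAX_TARGETS = 8, MAX_SITES = 16 };

/* Stand-in for a pointer to generated machine code (assumption). */
typedef int (*CodePtr)(int);

/* One branch instruction inside some compiled code segment. */
typedef struct {
    int     target;  /* bytecode target this branch jumps to */
    CodePtr linked;  /* NULL until the branch has been patched */
} BranchSite;

/* Per-target entry of the bytecode-to-machine-code mapping. */
typedef struct {
    CodePtr     code;                 /* NULL means "not compiled yet" */
    BranchSite *incoming[MAX_SITES];  /* branches patched to jump here */
    int         n_incoming;
} TargetEntry;

static TargetEntry targets[MAX_TARGETS];
static int compile_count;  /* how often the "compiler" ran */

static int body_double(int x) { return 2 * x; }

/* Hypothetical compiler: a real JIT would emit machine code here. */
static CodePtr compile_target(int target) {
    (void)target;
    compile_count++;
    return body_double;
}

/* Execute a branch: direct jump if linked, otherwise compile and link. */
static int take_branch(BranchSite *site, int arg) {
    if (site->linked != NULL)
        return site->linked(arg);              /* fast path */
    TargetEntry *t = &targets[site->target];
    if (t->code == NULL)
        t->code = compile_target(site->target); /* compile on demand */
    if (t->n_incoming < MAX_SITES) {
        t->incoming[t->n_incoming++] = site;    /* remember for invalidation */
        site->linked = t->code;                 /* "patch" the branch */
    }
    return t->code(arg);
}

/* Unlink every branch that jumps to this target and drop its code. */
static void invalidate_target(int target) {
    TargetEntry *t = &targets[target];
    for (int i = 0; i < t->n_incoming; i++)
        t->incoming[i]->linked = NULL;
    t->n_incoming = 0;
    t->code = NULL;
}
```

Calling `take_branch` twice through different branch sites with the same target compiles the target only once. After `invalidate_target`, both sites fall back to the slow path. Even in this toy form the incoming-branch list is extra state that a pure interpreter never needs.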

All this is quite a bit more complicated than a pure interpreter, even one written in assembler. Whether it is worth taking on this complexity depends on how high the interpreter overhead actually is. If the bytecode format is under our control, then we can optimise it for our interpreter, as done in LuaJIT 2.

This isn’t always the case, though. The DynamoRIO system is a runtime instrumentation framework for x86. The x86 instruction format is very complex and thus expensive to decode. DynamoRIO therefore does not use an interpreter and instead decodes the host program into basic blocks. (No code generation is really needed, because DynamoRIO emulates x86 on x86, but you could imagine using the same technique to emulate x86 on, say, ARM.) The challenges (and solutions) for this approach are discussed in Derek Bruening’s excellent PhD thesis on DynamoRIO.

Bebenita et al. describe an interpreter-less JIT-compiler design for a Java VM in their paper “Trace-Based Compilation in Execution Environments without Interpreters” (PDF).

DSLs for generating interpreters

The PyPy project generates a C interpreter and a trace-based JIT from a description in RPython (“Restricted Python”), a language with Python-like syntax but semantics closer to C with type inference. Laurence Tratt described his experiences using PyPy in his article “Fast Enough VMs in Fast Enough Time”. PyPy’s toolchain makes many design decisions for you; its usefulness therefore depends on whether these decisions align with your goals. The PyPy Status Blog contains many examples of language VMs written in PyPy (e.g. PHP, BF, regular expressions).

A DSL aimed at developing a fast assembly interpreter can still be useful for other purposes. One problem in implementing a JIT compiler is ensuring that the semantics of the compiled code match the semantics of the interpreter (or baseline compiler). By having a slightly higher-level abstraction it could become possible to use the same specification to both generate the interpreter and parts of the compiler. For example, in a trace-based JIT, the code for recording and interpreting looks very similar:

    Interpreter:                      Trace Recorder:

    mov tmp, [BASE + 8 * RB]          Ref r1 = loadSlot(ins->b);
                                      Ref r2 = loadSlot(ins->c);
    add tmp, [BASE + 8 * RC]          Ref r3 = emit(IR_ADD_INT, r1, r2);
    mov [BASE + 8 * RA], tmp          writeSlot(ins->a, r3);

If we treat the trace recorder as just another architecture, it may save us a lot of maintenance effort later on.
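As a rough illustration of the "trace recorder as just another architecture" idea, the C sketch below writes the semantics of an integer ADD once and instantiates it for two backends. This is only one possible way to do it, using a preprocessor macro in place of a real DSL. Everything here is hypothetical: the `SPEC_ADD` macro, the `Interp_*` and `Rec_*` backends, the IR layout and the `IR_SLOAD` opcode are assumptions for the example, loosely modelled on the snippet above.

```c
#include <stddef.h>

enum { NUM_SLOTS = 8, MAX_IR = 32 };

/* ADD a, b, c: slot[a] = slot[b] + slot[c] */
typedef struct { int a, b, c; } Ins;

/* Single specification of ADD, written against an abstract backend B. */
#define SPEC_ADD(B, ctx, ins)                            \
    do {                                                 \
        B##_Val x = B##_load_slot((ctx), (ins)->b);      \
        B##_Val y = B##_load_slot((ctx), (ins)->c);      \
        B##_Val z = B##_add((ctx), x, y);                \
        B##_write_slot((ctx), (ins)->a, z);              \
    } while (0)

/* Backend 1: the interpreter works on concrete values. */
typedef long Interp_Val;
typedef struct { long slots[NUM_SLOTS]; } Interp;

static Interp_Val Interp_load_slot(Interp *vm, int s) { return vm->slots[s]; }
static Interp_Val Interp_add(Interp *vm, Interp_Val x, Interp_Val y) {
    (void)vm;
    return x + y;
}
static void Interp_write_slot(Interp *vm, int s, Interp_Val v) { vm->slots[s] = v; }

/* Backend 2: the recorder works on references to IR instructions. */
typedef enum { IR_SLOAD, IR_ADD_INT } IROp;
typedef struct { IROp op; int x, y; } IRIns;
typedef int Rec_Val;  /* 1-based index into ir[]; 0 means "no value" */
typedef struct {
    IRIns   ir[MAX_IR];
    int     n;
    Rec_Val slot_ref[NUM_SLOTS];  /* IR ref currently held by each slot */
} Rec;

static Rec_Val rec_emit(Rec *r, IROp op, int x, int y) {
    if (r->n >= MAX_IR)
        return 0;  /* trace too long; a real recorder would abort the trace */
    r->ir[r->n] = (IRIns){ op, x, y };
    return ++r->n;
}
static Rec_Val Rec_load_slot(Rec *r, int s) {
    if (r->slot_ref[s] == 0)  /* first use: load the slot from the stack */
        r->slot_ref[s] = rec_emit(r, IR_SLOAD, s, 0);
    return r->slot_ref[s];
}
static Rec_Val Rec_add(Rec *r, Rec_Val x, Rec_Val y) {
    return rec_emit(r, IR_ADD_INT, x, y);
}
static void Rec_write_slot(Rec *r, int s, Rec_Val v) { r->slot_ref[s] = v; }

/* Both implementations are generated from the same specification. */
static void interp_add(Interp *vm, const Ins *ins) { SPEC_ADD(Interp, vm, ins); }
static void record_add(Rec *r, const Ins *ins)     { SPEC_ADD(Rec, r, ins); }
```

Running `interp_add` on slots holding 40 and 2 stores 42 in the destination slot, while `record_add` on the same instruction emits two slot loads followed by an `IR_ADD_INT`. A change to the semantics of ADD then only has to be made in one place.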

Further Reading

There are many other resources on this topic; here’s a short selection of interesting articles and papers.

Mike Pall on why compilers have such a hard time optimising bytecode interpreters.

Mike Pall explains some important features of LuaJIT’s ARM interpreter. On x86, LuaJIT uses SSE instructions, which are no longer costly on modern desktop processors. On ARM, fast floating-point support is not guaranteed, so the interpreter has to efficiently support two number types (integer and float).

A longer article explaining a direct-threaded interpreter in C.

The CPython interpreter uses direct threading if possible. It has an interesting note on how to stop GCC from de-optimising direct-threaded code. If we’re not careful, GCC (at least in some versions) may decide to “optimise” direct-threaded code by removing the duplicated code, which ends up decreasing branch prediction accuracy and thus performance.

Context threading is a technique that uses a small amount of code generation to expose interpreter jumps to the hardware branch predictor, thus eliminating most of the overhead due to branch mispredictions. Also check out Mathew Zaleski’s other publications on related topics.

Wingolog has an article on WebKit’s assembly-based interpreter and the Ruby-based DSL used to generate it. Also check out Wingo’s articles on V8.

There’s quite a large set of optimisations that can be done for purely interpreter-based implementations. Stefan Brunthaler’s PhD thesis “Purely Interpretative Optimizations” (PDF) discusses these.

VMGen is a tool that generates interpreters from a higher-level description. I think I read some papers referencing it, but I haven’t tried it myself.

The announcement of SquirrelFish from the WebKit team discusses the reasoning behind a register-based bytecode design. They also link to relevant papers.

If you have more links to good articles on the topic, let me know.